Papers that I should read today - Dec 6th, 2023

Modelling and measuring human movement, collaborating with robots.

Hello,

this is the first issue of a newsletter in which I will try to put on paper (or rather, on screen) the scientific papers that I should read today for my work or personal development.

I am an assistant professor in Biomedical Engineering based in Rome. I teach Neural Engineering and work on mathematical models for human motor control and biomedical signal processing, so most of the papers I should read will be on these topics. I will also try my best to include at least one paper outside of my main research interests.

Paper 1 - Frictional internal work of damped limbs oscillation in human locomotion

Human joints are not ideal and, as is unfortunately always the case in the real world, friction plays a critical role in most of the motor tasks we perform on a day-to-day basis. In this 2020 paper, Minetti et al. adapt mathematical models of human (and, more generally, animal) locomotion to account for energetic mechanisms related to friction at the joint level, providing a more detailed energetic characterisation of motion.

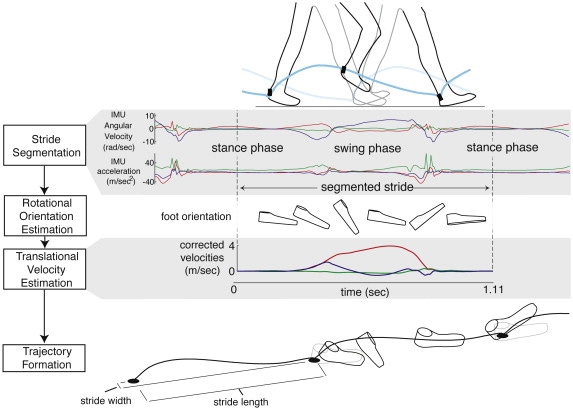

Paper 2 - Measurement of foot placement and its variability with inertial sensors

When one wants to track the 3D movement of a body segment, an accurate system needs either some kind of controlled camera setup or an accurate mathematical model of the movement being executed. Since both of these requirements are undesirable when analysing free tasks, inertial sensors can be a viable solution for fast and acceptably accurate motion tracking. In this 2013 paper, Rebula et al. aim to characterise the accuracy of foot placement measurements during gait and to assess the feasibility of this kind of experimental setup in clinical and rehabilitation scenarios.

Paper 3 - Human-Robot Interaction With Robust Prediction of Movement Intention Surpasses Manual Control

When a human interacts with a robot, there is no guarantee that the robot knows what the optimal interaction modality is. In such a collaboration, the cognitive ability of the human is combined with the physical capabilities of the robot, with the ultimate aim of enhancing the productivity and safety of task execution. In this 2021 paper, Veselic et al. propose a context- and user-aware modality for controlling the robot, and demonstrate that when the robot is aware of both the context and the user's intention, the performance of the collaboration surpasses that achieved under full manual control.